Setting up deep learning GPUs on AWS

A decade ago, if you wanted access to a GPU to accelerate your data processing or scientific simulation code, you’d either have to get hold of a PC gamer or contact your friendly neighborhood supercomputing center. Today, you can log on to your AWS console and choose from a range of GPU-based Amazon EC2 instances.

You can launch GPU instances with different GPU memory sizes (8 GB, 16 GB, 32 GB), NVIDIA GPU architectures (Turing, Volta, Maxwell, Kepler), capabilities (FP64, FP32, FP16, INT8, Tensor Cores, NVLink) and numbers of GPUs per instance (1, 2, 4, 8, 16). You can also select instances with different numbers of vCPUs, amounts of system memory and network bandwidth, and add a range of storage options (object storage, network file systems, block storage, etc.).

Options are always a good thing, provided you know what to choose when. My goal in writing this article is to provide you with some guidance on how you can choose the right GPU instance on AWS for your deep learning projects. I’ll discuss key features and benefits of various EC2 GPU instances, and workloads that are best suited for each instance type and size. If you’re new to AWS, or new to GPUs, or new to deep learning, my hope is that you’ll find the information you need to make the right choice for your projects.

TL;DR — scroll all the way to the bottom

Why you should choose the right GPU instance, not just the right GPU

A GPU is the workhorse of a deep learning system, but the best deep learning system is more than just a GPU. You have to choose the right amount of compute power (CPUs, GPUs), storage, networking bandwidth and optimized software that can maximize utilization of all available resources.

Some deep learning models need more system memory or a more powerful CPU for data pre-processing; others run fine with fewer CPU cores and less system memory. This is why you’ll see many Amazon EC2 GPU instance options, some with the same GPU type but different CPU, storage and networking options.

If you’re new to AWS or new to deep learning on AWS, making this choice can feel overwhelming, but you are here now, and I’m going to guide you through the process.

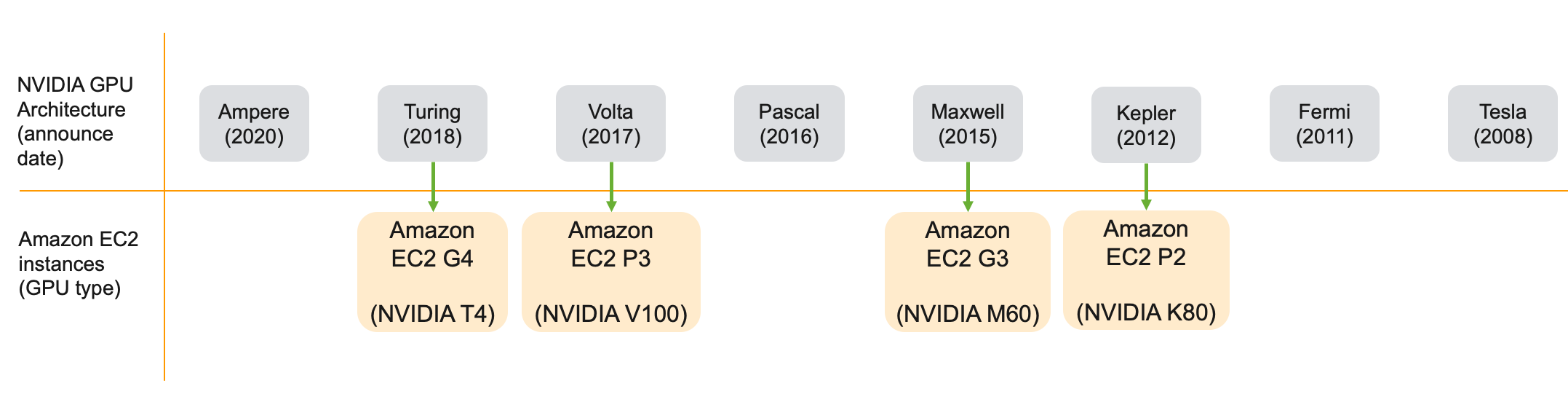

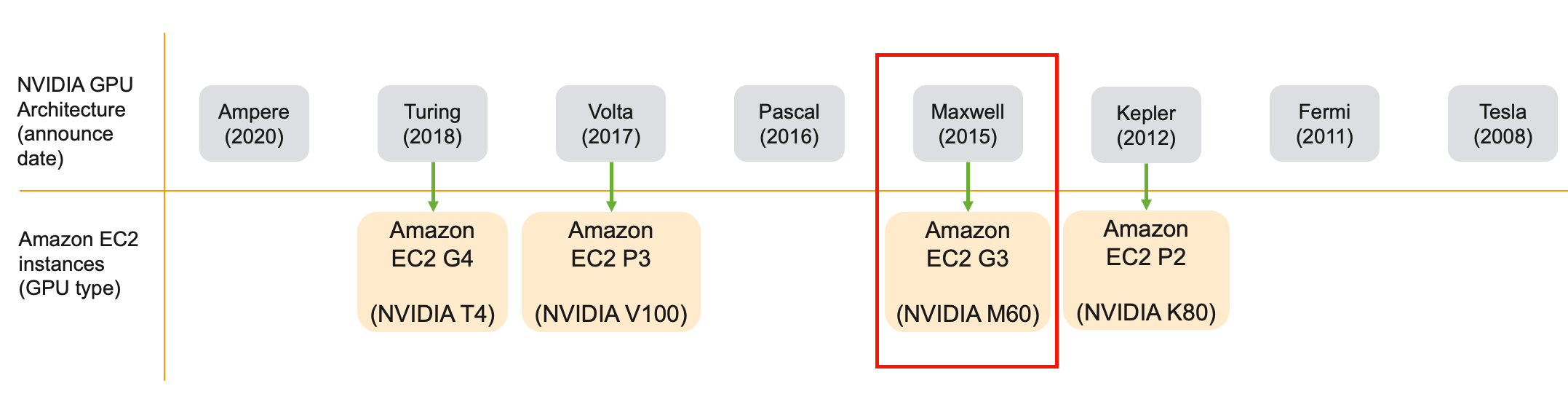

On AWS, you have access to two families of GPU instances: the P family and the G family of EC2 instances. The different generations of the P family (P3, P2) and the G family (G4, G3) are based on different generations of GPU architecture, as shown below.

Each instance family (P and G) includes instance types (P2, P3, G3, G4), and each instance type includes instances of different sizes. Each instance size has a certain vCPU count, GPU memory, system memory, number of GPUs per instance, and network bandwidth. A full list of all available options is shown in the diagram below.

Now let’s take a look at each of these instances by family, generation and sizes.

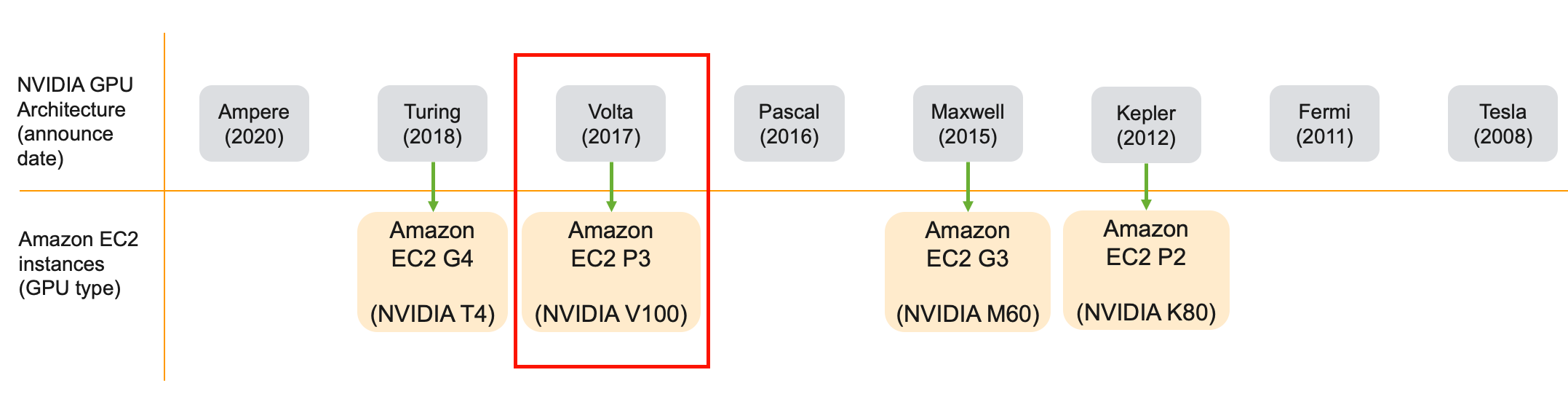

Amazon EC2 P3: Best instance for high-performance deep learning training

P3 instances provide access to NVIDIA V100 GPUs based on the NVIDIA Volta architecture, and you can launch a single GPU per instance or multiple GPUs per instance (4 GPUs, 8 GPUs). A single-GPU instance, p3.2xlarge, can be your daily driver for deep learning training. The most capable instance, p3dn.24xlarge, gives you access to 8 x V100 with 32 GB GPU memory, 96 vCPUs and 100 Gbps networking throughput for record-setting training performance.

P3 instance features at a glance:

- GPU generation: NVIDIA Volta
- Supported precision types: FP64, FP32, FP16, Tensor Cores (mixed-precision)
- GPU memory: 16 GB (32 GB only on p3dn.24xlarge)
- GPU interconnect: NVLink high-bandwidth interconnect, 2nd generation

The NVIDIA V100 includes special cores for deep learning called Tensor Cores to run mixed-precision training. Rather than training the model in single precision (FP32), your deep learning framework can use Tensor Cores to perform matrix multiplication in half-precision (FP16) and accumulate in single precision (FP32). This often requires updating your training scripts, but can lead to much higher training performance. Each framework handles this differently, so refer to your framework’s official guides (TensorFlow, PyTorch and MXNet) for using mixed-precision.
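The numerical idea behind multiplying in FP16 and accumulating in FP32 can be illustrated without a GPU. The following is a minimal pure-Python sketch, not Tensor Core code: it rounds values to FP16 and FP32 with the standard `struct` module and compares a dot product whose running sum is kept in FP16 against one kept in FP32. The helper names (`to_fp16`, `to_fp32`, `dot`) and the input values are assumptions made for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(a, b, accumulate):
    """Dot product with FP16 inputs; `accumulate` rounds the running sum."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)    # inputs are stored in FP16
        total = accumulate(total + product)  # running sum kept in FP16 or FP32
    return total


n = 4096
a = [0.01] * n
b = [1.0] * n

# Reference value computed in Python's native double precision.
exact = sum(to_fp16(x) * to_fp16(y) for x, y in zip(a, b))
acc16 = dot(a, b, to_fp16)  # FP16 multiply, FP16 accumulate
acc32 = dot(a, b, to_fp32)  # FP16 multiply, FP32 accumulate

print(f"reference={exact:.4f}  fp16 accumulate={acc16:.4f}  fp32 accumulate={acc32:.4f}")
```

With an FP16 running sum, small products eventually fall below the rounding step of the accumulator and stop contributing, which is the failure mode that FP32 accumulation avoids.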

P3 instances come in 4 different sizes: p3.2xlarge, p3.8xlarge, p3.16xlarge and p3dn.24xlarge. Let’s take a look at each of them.

p3.2xlarge: Best GPU instance for single GPU training

This should be your go-to instance for most of your deep learning training work. You get access to one NVIDIA V100 GPU with 16 GB GPU memory, 8 vCPUs, 61 GB system memory and up to 10 Gbps network bandwidth. V100 is the fastest GPU available in the cloud at the time of this writing and supports Tensor Cores that can further improve performance if your scripts can take advantage of mixed-precision training.

You can provision this instance using Amazon EC2 or Amazon SageMaker Notebook instances, or submit a training job to an Amazon SageMaker managed instance using the SageMaker Python SDK. If you spin up an Amazon EC2 p3.2xlarge instance and run the nvidia-smi command, you can see that the GPU on the instance is a V100-SXM2 version, which supports NVLink (we’ll discuss this in the next section). Under Memory-Usage you’ll see that it has 16 GB GPU memory. If you need more than 16 GB of GPU memory for large models or large data sizes, then you should consider p3dn.24xlarge (more details below).
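If you would rather check the GPU model and memory from a script than read the nvidia-smi table, a minimal sketch along these lines may help. It assumes nvidia-smi is on the PATH of the instance and uses its `--query-gpu` and `--format=csv` options; the function names are hypothetical, and the sample row is illustrative rather than captured from a real instance.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_rows(text):
    """Parse `name, memory.total (MiB)` CSV rows into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        name, memory_mib = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "memory_gib": float(memory_mib) / 1024})
    return gpus


def query_gpus():
    """Run nvidia-smi on a GPU instance and return the parsed rows."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)


# Illustrative row only, not captured from a real instance.
sample = "Tesla V100-SXM2-16GB, 16160\n"
gpus = parse_gpu_rows(sample)
for gpu in gpus:
    print(f"{gpu['name']}: {gpu['memory_gib']:.1f} GiB")
```

On an actual GPU instance you would call `query_gpus()` instead of parsing the sample string.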

p3.8xlarge and p3.16xlarge: Ideal GPU instances for small-scale multi-GPU training and running parallel experiments

If you need more GPUs for experimenting, more vCPUs for data pre-processing and data augmentation, or higher network bandwidth, consider p3.8xlarge (with 4 GPUs) and p3.16xlarge (with 8 GPUs). Each GPU is an NVIDIA V100 with 16 GB memory. They also include NVLink interconnect for high-bandwidth communication between GPUs, which comes in handy for multi-GPU training. With p3.8xlarge you get access to 32 vCPUs and 244 GB system memory, and with p3.16xlarge you get access to 64 vCPUs and 488 GB system memory. These instances are ideal for a couple of use cases:

Multi-GPU training jobs: If you’re just getting started with multi-GPU training, 4 GPUs on p3.8xlarge or 8 GPUs on a p3.16xlarge can give you a nice speedup. You can also use this instance to prepare your training scripts for much larger-scale multi-node training jobs, which often require you to modify your training scripts using libraries like Horovod, tf.distribute.Strategy or torch.distributed. Refer to my step-by-step guide to using Horovod for distributed training:

Blog post: A quick guide to distributed training with TensorFlow and Horovod on Amazon SageMaker

Parallel experiments: Multi-GPU instances also come in handy when you have to run variations of your model architecture and hyperparameters in parallel, to experiment faster. With p3.16xlarge you can run training on up to 8 variants of your model. Unlike multi-GPU training jobs, since each GPU is running training independently and doesn’t block the use of other GPUs, you can be more productive during the model exploration phase.
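One common way to run independent experiments side by side is to start one process per GPU and restrict each process to its own device with the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below only plans the commands; the script name `train.py`, the `--lr` flag and the learning-rate values are hypothetical placeholders.

```python
import os


def plan_parallel_runs(configs, num_gpus, script="train.py"):
    """Assign one hyperparameter config per GPU; returns (env, argv) pairs."""
    if len(configs) > num_gpus:
        raise ValueError("more configs than GPUs; queue the extras for a later round")
    runs = []
    for gpu_id, config in enumerate(configs):
        # Each process only sees the single GPU it was assigned.
        env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))
        argv = ["python", script] + [f"--{key}={value}" for key, value in config.items()]
        runs.append((env, argv))
    return runs


# Hypothetical sweep: 8 learning rates on the 8 GPUs of a p3.16xlarge.
configs = [{"lr": lr} for lr in (1e-1, 3e-2, 1e-2, 3e-3, 1e-3, 3e-4, 1e-4, 3e-5)]
runs = plan_parallel_runs(configs, num_gpus=8)
for env, argv in runs:
    print(env["CUDA_VISIBLE_DEVICES"], " ".join(argv))
    # To launch for real: subprocess.Popen(argv, env=env)
```

Because the runs share no state, a slow or failed variant does not hold up the others.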

p3dn.24xlarge: Fastest GPU instance in the cloud

If you need the absolute fastest GPU training instance in the cloud, look no further than the p3dn.24xlarge. You get access to 8 NVIDIA V100 GPUs, but unlike the p3.16xlarge, whose GPUs have 16 GB of memory each, the GPUs on p3dn.24xlarge have 32 GB each. This means you can fit much larger models and train with much larger batch sizes. The instance gives you access to 96 vCPUs, 768 GB system memory and 100 Gbps network bandwidth, which becomes important for achieving near-linear scaling in large-scale distributed training jobs.

Run nvidia-smi on this instance and you can see that the GPU memory is 32 GB. This is the largest memory per GPU you’ll find on AWS today. If your models are large or you’re working on 3D images or other large data batches, then this is the instance to consider. Run nvidia-smi topo --matrix and you’ll see that NVLink is used for communication between GPUs. NVLink provides much higher inter-GPU bandwidth than PCIe, which means that multi-GPU and distributed training jobs will run much faster.
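To see why inter-GPU bandwidth matters, here is a minimal sketch of the gradient exchange in data-parallel training, assuming plain synchronous SGD. The function names and gradient values are hypothetical; libraries such as Horovod and torch.distributed perform this exchange with optimized all-reduce collectives rather than Python lists.

```python
def all_reduce_mean(per_gpu_grads):
    """Average gradients element-wise across workers (what an all-reduce computes)."""
    num_workers = len(per_gpu_grads)
    return [sum(values) / num_workers for values in zip(*per_gpu_grads)]


def sgd_step(weights, grads, lr):
    """Plain SGD update, applied identically on every worker."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Hypothetical gradients from 4 GPUs, each computed on a different shard of the batch.
per_gpu_grads = [
    [0.5, -1.0],
    [1.5, -3.0],
    [1.0, -2.0],
    [1.0, -2.0],
]
weights = [1.0, 1.0]

avg = all_reduce_mean(per_gpu_grads)  # this exchange happens on every training step
weights = sgd_step(weights, avg, lr=0.5)
print(avg, weights)
```

Every GPU has to send and receive a full set of gradients on each step, so for large models the interconnect can become the limiting factor.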

The AWS web page includes some benchmark claims, including record-setting performance numbers for Mask R-CNN and BERT training:

- 27 mins to train Mask R-CNN with 24 p3dn.24xlarge (192 GPUs)

- 62 mins to train BERT with 256 p3dn.24xlarge (2048 GPUs)

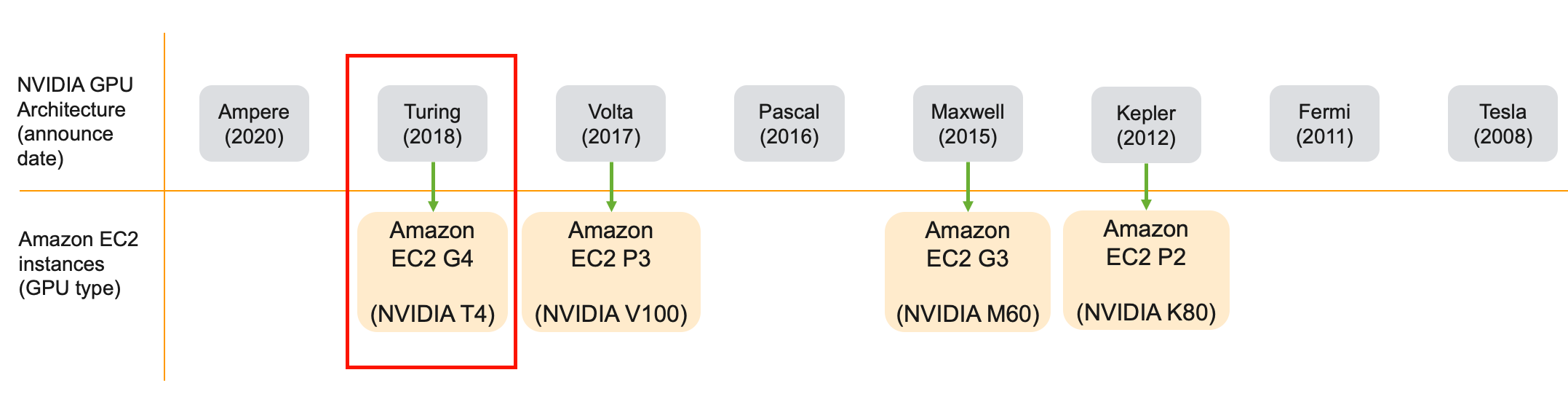

Amazon EC2 G4: Best instance for cost-efficient deep learning training, and high-performance inference deployments

G4 instances provide access to NVIDIA T4 GPUs based on NVIDIA Turing architecture. You can launch a single GPU per instance or multiple GPUs per instance (4 GPUs, 8 GPUs).

The NVIDIA Turing architecture came after the NVIDIA Volta architecture and introduced several new features for machine learning, such as next-generation Tensor Cores and integer precision support. However, the NVIDIA T4 GPU’s primary use cases are inference deployments and graphics, and it is much lower powered than the NVIDIA V100 GPU available in a P3 instance. P3 instances should still be your go-to instances for high-performance training.

G4 instance features at a glance:

- GPU generation: NVIDIA Turing
- Supported precision types: FP64, FP32, FP16, Tensor Cores (mixed-precision), INT8, INT4, INT1
- GPU memory: 16 GB
- GPU interconnect: PCIe

What’s unique about the NVIDIA T4 is its support for the integer precision (INT8) data type, which can significantly increase inference throughput. During training, model weights and gradients are typically stored in single precision (FP32). As it turns out, to run predictions on a trained model you don’t actually need full precision, and you can get away with reduced precision calculations in either half precision (FP16) or 8-bit integer precision (INT8). Doing so gives you a boost in throughput without sacrificing too much accuracy. There will be some drop in accuracy, and how much depends on various factors specific to your model and training. Overall, you get the best inference performance/cost with G4 instances compared to other GPU instances. NVIDIA’s support matrix shows which neural network layers and GPU types support INT8 and other precisions for inference.
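To make the accuracy trade-off concrete, here is a minimal sketch of symmetric INT8 quantization in pure Python. It assumes a single per-tensor scale factor, which is one of several possible schemes and not necessarily what any particular inference library uses; the function names and weight values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights from a trained layer.
weights = [0.82, -0.41, 0.05, -1.27, 0.0, 0.63]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"scale={scale:.4f}", f"worst error={worst_error:.6f}")
```

Each value is off by at most half a quantization step, which is why the accuracy drop depends on how sensitive a given model is to small perturbations of its weights and activations.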

OK, G4 instances are great for inference, but can I use them for training? Yes! g4dn.xlarge should be your go-to instance type for development, testing, prototyping and small model training. However, you should note that although the NVIDIA T4 GPU in G4 instances is based on the more recent Turing architecture, the T4 is a lower powered GPU compared to P3’s V100. Run nvidia-smi on this instance and you can see that the g4dn.xlarge has an NVIDIA T4 GPU with 16 GB of GPU memory. You’ll also notice that the power cap is 70 W. This tells you that it is a much lower powered GPU than the V100 GPU in P3 instances, which has a power cap of 300 W.

If you take a look at NVIDIA’s data sheet for NVIDIA T4 and NVIDIA V100, you can confirm that the T4 is rated at TDP 70 W, has 2560 NVIDIA CUDA Cores and 320 NVIDIA Turing Tensor Cores. NVIDIA V100 is rated at TDP 300 W, has 5120 NVIDIA CUDA Cores and 640 NVIDIA Volta Tensor Cores.
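As a quick sanity check on those figures, the short sketch below computes the V100-to-T4 ratios. The numbers are the ones quoted in the paragraph above; the dictionary layout and the `ratio` helper are assumptions made for illustration.

```python
# Figures as quoted above from NVIDIA's data sheets.
SPECS = {
    "T4": {"tdp_watts": 70, "cuda_cores": 2560, "tensor_cores": 320},
    "V100": {"tdp_watts": 300, "cuda_cores": 5120, "tensor_cores": 640},
}


def ratio(field, numerator="V100", denominator="T4"):
    """How many times larger `field` is on one GPU than on the other."""
    return SPECS[numerator][field] / SPECS[denominator][field]


for field in ("tdp_watts", "cuda_cores", "tensor_cores"):
    print(f"{field}: V100 is {ratio(field):.2f}x the T4")
```

The V100 has twice the cores of the T4 but more than four times the power budget, so raw core counts alone do not capture the difference between the two.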

While it’s not fair to compare these values across generations, given the improvements in chip energy efficiency and other features, they do indicate that the two GPUs have completely different performance profiles. If you want the best single-GPU training performance, your first choice should still be p3.2xlarge. If you want a good GPU for development, prototyping and optimizing models for inference deployment, g4dn.xlarge should be your first choice.

The following instance sizes all give you access to a single NVIDIA T4 GPU with increasing numbers of vCPUs and amounts of system memory, storage and network bandwidth: g4dn.xlarge (4 vCPU, 16 GB system memory), g4dn.2xlarge (8 vCPU, 32 GB system memory), g4dn.4xlarge (16 vCPU, 64 GB system memory), g4dn.8xlarge (32 vCPU, 128 GB system memory), g4dn.16xlarge (64 vCPU, 256 GB system memory). You can find the full list of differences on the G4 instance product page under the Product Details section.
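Since these sizes differ only in CPU, memory, storage and networking, choosing one comes down to finding the smallest size that covers your pre-processing needs. A minimal sketch, using only the vCPU and memory figures listed above (the helper name and the example requirements are hypothetical):

```python
# Single-GPU G4 sizes as (name, vCPUs, system memory in GB), smallest first.
G4DN_SINGLE_GPU = [
    ("g4dn.xlarge", 4, 16),
    ("g4dn.2xlarge", 8, 32),
    ("g4dn.4xlarge", 16, 64),
    ("g4dn.8xlarge", 32, 128),
    ("g4dn.16xlarge", 64, 256),
]


def smallest_g4dn(min_vcpus, min_memory_gb):
    """Return the smallest single-GPU g4dn size meeting both minimums, or None."""
    for name, vcpus, memory_gb in G4DN_SINGLE_GPU:
        if vcpus >= min_vcpus and memory_gb >= min_memory_gb:
            return name
    return None


print(smallest_g4dn(min_vcpus=6, min_memory_gb=20))
print(smallest_g4dn(min_vcpus=4, min_memory_gb=100))
```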

G4 instance sizes also include two multi-GPU configurations: g4dn.12xlarge with 4 GPUs and g4dn.metal with 8 GPUs. However, if your use case is multi-GPU or multi-node/distributed training, you should consider using P3 instances. Run nvidia-smi topo --matrix on a multi-GPU g4dn.12xlarge instance and you’ll see that the GPUs are not connected by high-bandwidth NVLink GPU interconnect. P3 multi-GPU instances include high-bandwidth NVLink interconnects that can speed up multi-GPU training.

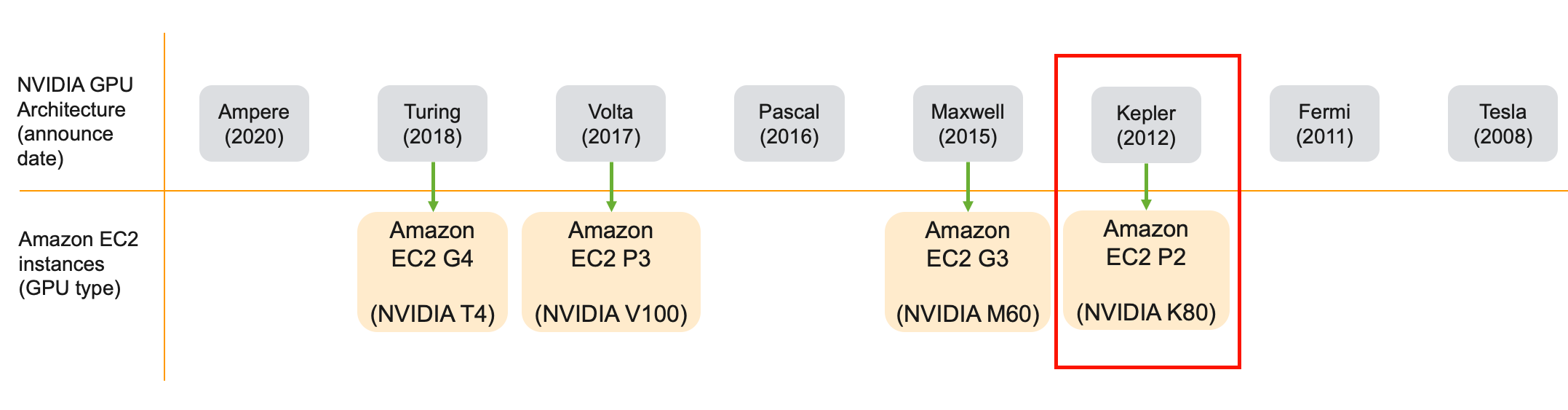

Amazon EC2 P2: Cost-effective for training and prototyping, but consider G4 instances first

P2 instances give you access to NVIDIA K80 GPUs based on the NVIDIA Kepler architecture. The Kepler architecture is a few generations old (Kepler -> Maxwell -> Pascal -> Volta -> Turing), so these are not the fastest GPUs around. They do have some specific features, such as double precision (FP64) support, that make them attractive and cost-effective for high-performance computing (HPC) workloads that rely on the extra precision. P2 instances come in 3 different sizes: p2.xlarge (1 GPU), p2.8xlarge (8 GPUs), p2.16xlarge (16 GPUs).

The NVIDIA K80 is an interesting GPU. A single NVIDIA K80 is actually two GPUs on one physical board, which NVIDIA calls a dual-GPU design. This means that when you launch a p2.xlarge instance, you’re only getting one of the two GPUs on a physical K80 board. Similarly, when you launch a p2.8xlarge you’re getting access to eight GPUs on four K80 boards, and with p2.16xlarge you’re getting access to sixteen GPUs on eight K80 boards. Run nvidia-smi on a p2.xlarge and what you see is one of the two GPUs on an NVIDIA K80 board, with 12 GB of GPU memory.

P2 instance features at a glance:

- GPU generation: NVIDIA Kepler
- Supported precision types: FP64, FP32
- GPU memory: 12 GB
- GPU interconnect: PCIe

So, when should you consider using P2 instances for deep learning?

Prior to the launch of Amazon EC2 G4 instances, P2 instances were the recommended cost-effective instance type for deep learning training. Since the launch of G4 instances, I recommend G4 as the go-to cost-effective GPU instance for deep learning training and prototyping. P2 continues to be cost-effective, but you’ll miss out on several newer features, such as support for mixed-precision training (Tensor Cores) and reduced precision inference, which have become standard on newer generations, including the most recently announced NVIDIA Ampere architecture.

If you run nvidia-smi on the p2.16xlarge GPU instance, you’ll see 16 GPUs, which are part of 8 NVIDIA K80 boards because of the K80’s dual-GPU design. This is the largest number of GPUs you can get on a single instance on AWS. If you run nvidia-smi topo --matrix, you’ll see that all inter-GPU communication goes through PCIe, unlike P3 multi-GPU instances, which use the much faster NVLink.

Amazon EC2 G3: Primarily for graphics workloads, cost-effective for deep learning, but consider P2 and G4 instances first

G3 instances give you access to NVIDIA M60 GPUs based on the NVIDIA Maxwell architecture. NVIDIA refers to the M60 GPUs as virtual workstations and positions them for professional graphics, but you can also use them for deep learning. However, with much more powerful and cost-effective options for deep learning with P3 and G4 instances, G3 instances should be your last option for deep learning.

G3 instance features at a glance:

- GPU generation: NVIDIA Maxwell
- Supported precision types: FP32
- GPU memory: 8 GB
- GPU interconnect: PCIe

Should you consider G3 instances for deep learning?

Prior to the launch of Amazon EC2 G4 instances, single-GPU G3 instances were a cost-effective way to develop, test and prototype. Although the Maxwell architecture is more recent than the Kepler architecture of the NVIDIA K80 found on P2 instances, you should still consider P2 instances before G3 for deep learning. Your choice order should be P3 > G4 > P2 > G3.

G3 instances come in 4 sizes: g3s.xlarge and g3.4xlarge (1 GPU each, with different system configurations), g3.8xlarge (2 GPUs) and g3.16xlarge (4 GPUs). Run nvidia-smi on a g3s.xlarge and you’ll see that this instance gives you access to an NVIDIA M60 GPU with 8 GB GPU memory.

On the horizon: NVIDIA A100 GPU based on the NVIDIA Ampere architecture

In May 2020 NVIDIA announced its new GPU architecture called NVIDIA Ampere Architecture, and the NVIDIA A100 Tensor Core GPU based on it. AWS also announced that it plans to offer Amazon EC2 instances based on the NVIDIA A100 GPUs.

In its developer blog post, NVIDIA discusses the key capabilities of the A100 GPU, which I encourage you to read if you’re as interested in the world of GPUs and deep learning as I am. At a quick glance, the A100 introduces new precision types, Bfloat16 (BF16) and NVIDIA’s TensorFloat-32 (TF32), along with GPU sharing through Multi-Instance GPU (MIG), faster NVLink for faster distributed training, and a host of other interesting features. I’m really excited about the NVIDIA A100 GPUs and can’t wait to take them out for a spin when they become available on AWS.

What other accelerator options do I have on AWS?

NVIDIA GPUs are no doubt a staple for deep learning training and inference. In some cases, such as inference deployments, a full GPU instance may be overkill for serving your model, and a CPU-only solution may not be performant enough. If you’re trying to optimize your cost/inference, you have to choose the lowest cost option that delivers the best inference throughput, under your desired latency for your deep learning model.

On AWS today, you have four different hardware options for inference deployments. You are already familiar with the two common options: compute-optimized CPU instances such as the C5 family, and high-performance GPU instances such as the P3 and G4 families.

Below, I’ll discuss the two other accelerator options, Amazon Elastic Inference and AWS Inferentia, and when you should consider them.

Amazon Elastic Inference

With Amazon Elastic Inference (EI), rather than selecting a GPU instance for hosting your models, you can attach low-cost GPU-powered acceleration to a CPU-only instance via the network.

EI comes in different sizes, so you can add just the amount of GPU processing power you need for your model. Since the GPU acceleration is accessed via the network, EI adds some latency compared to a native GPU in, say, a G4 instance, but it will still be much faster than a CPU-only instance if you have a demanding model.

It’s therefore important to define your target latency SLA for your application and work backwards to choose the right accelerator option. Let’s consider a hypothetical scenario below.

Please note all these numbers in the hypothetical scenario are made-up for the purpose of illustration. Every use case is different. Use the scenario only for general guidance.

Let’s say your application can deliver a good customer experience if your total latency (app + network + model predictions) is under 200 ms. And let’s say, with a G4 instance type you can get total latency down to 40 ms which is well within your target latency. Also, let’s say with a C5 instance type you can only get total latency to 400 ms which does not meet your SLA requirements and results in poor customer experience.

With Elastic Inference, you can network-attach a “slice” or a “fraction” of a GPU to a CPU instance such as a C5 instance, and get your total latency down to, say, 180 ms, which is under the desired 200 ms mark. Since EI is significantly cheaper than provisioning a dedicated GPU instance, you save on your total deployment costs. A GPU instance like G4 will still deliver the best inference performance, but if the extra performance doesn’t improve your customer experience, you can use EI to stay under the target latency SLA, deliver a good customer experience and save on overall deployment costs.
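Working backwards from the latency SLA can be expressed as a small selection rule: discard every option that misses the target, then take the cheapest of what remains. In the sketch below the latencies come from the hypothetical scenario above, while the relative costs are invented purely for illustration and are not AWS prices.

```python
# Latencies are from the hypothetical scenario; relative costs are made up
# for this sketch and do not reflect actual AWS pricing.
OPTIONS = [
    {"name": "C5 (CPU only)", "latency_ms": 400, "relative_cost": 1.0},
    {"name": "C5 + Elastic Inference", "latency_ms": 180, "relative_cost": 1.5},
    {"name": "G4 (dedicated GPU)", "latency_ms": 40, "relative_cost": 3.0},
]


def cheapest_within_sla(options, sla_ms):
    """Pick the lowest-cost option whose total latency is under the SLA."""
    eligible = [opt for opt in options if opt["latency_ms"] < sla_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda opt: opt["relative_cost"])


choice = cheapest_within_sla(OPTIONS, sla_ms=200)
print(choice["name"])
```

With a tighter SLA the same rule would fall through to the dedicated GPU instance, and it returns `None` when no option qualifies.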

AWS Inferentia and Amazon EC2 Inf1 Instances

Amazon EC2 Inf1 is the new kid on the block. Inf1 instances give you access to a high-performance inference chip called AWS Inferentia, custom designed by AWS. AWS Inferentia chips support FP16, BF16 and INT8 for reduced precision inference. To target AWS Inferentia, you can use the AWS Neuron software development kit (SDK) to compile your TensorFlow, PyTorch or MXNet model. The SDK also comes with a runtime library to run the compiled models in production.

Amazon EC2 Inf1 instances deliver better performance/cost compared to GPU EC2 instances. You’ll just need to make sure that the AWS Neuron SDK supports all layers in your model. See the AWS Neuron SDK documentation for a list of supported ops for each framework.

Optimizing for cost

You have a few different options to optimize the cost of your training and inference workloads.

Spot instances: Spot Instance pricing makes high-performance GPUs much more affordable by giving you access to spare Amazon EC2 compute capacity at a steep discount compared to on-demand rates. For an up-to-date list of prices by instance and Region, visit the Spot Instance Advisor. In some cases you can save over 90% on your training costs, but your instances can be preempted and terminated with just 2 minutes’ notice. Your training scripts must checkpoint frequently and be able to resume training once Spot capacity is restored.
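The checkpoint-and-resume pattern can be sketched with a toy training loop. This is a minimal illustration using a local JSON file, with hypothetical function names and a made-up loss value standing in for real work; an actual training job would save model and optimizer state with its framework’s own checkpoint utilities.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the checkpoint atomically so an interruption cannot corrupt it."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on the same filesystem


def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"epoch": 0, "loss": None}


def train(path, total_epochs, interrupt_at=None):
    """Toy training loop; `interrupt_at` simulates a Spot interruption."""
    state = load_checkpoint(path)
    for epoch in range(state["epoch"], total_epochs):
        if interrupt_at is not None and epoch == interrupt_at:
            return state  # instance reclaimed before this epoch ran
        state = {"epoch": epoch + 1, "loss": 1.0 / (epoch + 1)}  # stand-in for real work
        save_checkpoint(path, state)
    return state


checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
first = train(checkpoint_path, total_epochs=10, interrupt_at=4)  # interrupted run
resumed = train(checkpoint_path, total_epochs=10)                # restarts from the checkpoint
print(first["epoch"], resumed["epoch"])
```

On a real Spot instance the checkpoint has to live somewhere that outlives the instance, such as Amazon S3 or a persistent volume, rather than on local temporary storage.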

Amazon SageMaker managed training: During the development phase, much of your time is spent prototyping, tweaking code and trying different options in your favorite editor or IDE (which is obviously VIM), none of which needs a GPU. You can save costs by simply decoupling your development and training resources, and Amazon SageMaker lets you do this easily. Using the Amazon SageMaker Python SDK, you can test your scripts locally on your laptop, desktop, EC2 instance or SageMaker notebook instance.

When you’re ready to train, specify what GPU instance type you want to train on and SageMaker will provision the instances, copy the dataset to the instance, train your model, copy results back to Amazon S3, and tear down the instance. You are only billed for the exact duration of training. Amazon SageMaker also supports managed Spot Training for additional convenience and cost savings.

I’ve written a guide on how to use it here: A quick guide to using Spot instances with Amazon SageMaker

Amazon Elastic Inference and Amazon Inf1 instance: Save costs for inference workloads by leveraging EI to add just the right amount of GPU acceleration to your CPU instances, or by leveraging cost effective Amazon Inf1 instances.

Optimize for cost by improving utilization:

- Optimize your training code to take full advantage of the Tensor Cores on P3 and G4 instances by enabling mixed-precision training. Every deep learning framework does this differently, so refer to your framework’s documentation.
- Use reduced-precision (INT8) inference on G4 instance types to improve performance. NVIDIA’s TensorRT library provides APIs to convert single-precision models to INT8, and its documentation includes examples.

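
Both tips rely on reduced-precision arithmetic. The sketch below uses only the Python standard library to show the two numerical ideas involved: loss scaling, which keeps small gradients from underflowing to zero in FP16, and symmetric INT8 quantization. It is an illustration of the arithmetic only. It is not TensorRT’s API or any framework’s mixed-precision implementation, and the function names are invented for this example.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def fp16_gradient(grad, loss_scale=1.0):
    """Store a scaled gradient in FP16, then unscale it in higher precision."""
    return to_fp16(grad * loss_scale) / loss_scale


def quantize_int8(values):
    """Symmetric per-tensor quantization: map [-max|v|, +max|v|] onto [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate real values from INT8 codes."""
    return [q * scale for q in quantized]
```

A gradient of `1e-8` is below the smallest value FP16 can represent, so `fp16_gradient(1e-8)` comes back as zero, while `fp16_gradient(1e-8, loss_scale=1024)` survives with a small rounding error. For INT8, the rounding error of each value is bounded by half the scale, which is why the calibration of that scale matters for accuracy.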

What software should I use on Amazon EC2 GPU instances?

Without optimized software, there is a risk that you’ll under-utilize the hardware resources you provision. You may be tempted to “pip install tensorflow/pytorch”, but I highly recommend using AWS Deep Learning AMIs or AWS Deep Learning Containers (DLC) instead.

AWS qualifies and tests them on all Amazon EC2 GPU instances, and they include AWS optimizations for networking and storage access, along with the latest NVIDIA and Intel drivers and libraries. Deep learning frameworks have upstream and downstream dependencies on higher-level schedulers and orchestrators and on lower-level infrastructure services. By using the AWS Deep Learning AMIs and DLCs, you know the whole stack has been tested end to end and is guaranteed to give you the best performance.

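
Whichever software stack you pick, it helps to check whether your job actually keeps the GPU busy. The sketch below reads utilization and memory use through `nvidia-smi`, assuming the NVIDIA driver is installed and `nvidia-smi` is on the `PATH`. The function names and the dictionary layout are choices made for this example.

```python
import subprocess

# Query fields and CSV formatting flags supported by nvidia-smi.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Parse nvidia-smi CSV output into one dictionary per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, name, util, mem_used, mem_total = [field.strip() for field in line.split(",")]
        stats.append({
            "index": int(index),
            "name": name,
            "utilization_pct": int(util),      # percent of time the GPU was busy
            "memory_used_mib": int(mem_used),
            "memory_total_mib": int(mem_total),
        })
    return stats


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed statistics."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

If utilization stays low while a training job runs, the bottleneck is likely elsewhere (data loading, preprocessing on too few vCPUs, or storage), and a larger GPU will not help until that is fixed.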

TL;DR

Congratulations! You made it to the end (even if you didn’t read all of the post). I intended this to be a quick guide that still provides enough context, references and links to learn more. In this final section, I’m going to give you the quick recommendation list. Please take the time to read about the specific instance type and GPU type you’re considering so that you can make an informed decision.

The recommendations below are very much my personal opinion, based on my experience working with GPUs and deep learning. Caveat emptor.

And (drum roll) here’s the list:

- Highest performing GPU instance on AWS. Period: p3dn.24xlarge
- Best single GPU training performance: p3.2xlarge (V100, 16 GB GPU)
- Best single-GPU instance for developing, testing and prototyping: g4dn.xlarge (T4, 16 GB GPU). Consider g4dn.(2/4/8/16)xlarge for more vCPUs and higher system memory.
- Best multi-GPU instance for single node training and running parallel experiments: p3.8xlarge (4 V100 GPUs, 16 GB per GPU), p3.16xlarge (8 GPUs, 16 GB per GPU)
- Best multi-GPU, multi-node distributed training performance: p3dn.24xlarge (8 V100 GPUs, 32 GB per GPU, 100 Gbps aggregate network bandwidth)
- Best single-GPU instance for inference deployments: G4 instance type. Choose instance size g4dn.(2/4/8/16)xlarge based on pre- and post-processing steps in your deployed application.
- I need the most GPU memory I can get for large models: p3dn.24xlarge (8 V100, 32 GB per GPU)
- I need access to Tensor Cores for mixed-precision training: P3 and G4 instance types. Choose the instance size based on your model size and application.
- I need access to double precision (FP64) for HPC and deep learning: P3, P2 instance types. Choose the instance size based on your application.
- I need 8 bit integer precision (INT8) for inference: G4 instance type. Choose instance size based on pre- and post-processing steps in your deployed application.
- I need access to half precision (FP16) for inference: P3, G4 instance type. Choose the instance size based on your application.
- I want GPU acceleration for inference but don’t need a full GPU: Use Amazon Elastic Inference and attach just the right amount of GPU acceleration you need.
- I want the best performance on any GPU instance: Use AWS Deep Learning AMI and AWS Deep Learning Containers
- I want to save money: Use Spot Instances and Managed Spot Training on Amazon SageMaker. Choose Amazon Elastic Inference for models that don’t take advantage of a full GPU.

Thank you for reading. If you found this article interesting, please check out my other blog posts on Medium, follow me on Twitter (@shshnkp) or LinkedIn, or leave a comment below. Want me to write on a specific machine learning topic? I’d love to hear from you!

Translated from: https://towardsdatascience.com/choosing-the-right-gpu-for-deep-learning-on-aws-d69c157d8c86
